Introduction

So GitLab’s container registry went down yesterday. Even though I consider GitLab’s service reliable, it reminded me that I really should practice what I preach and set up an additional private image registry for the sake of redundancy. Google’s Container Registry was the first one that came to mind.

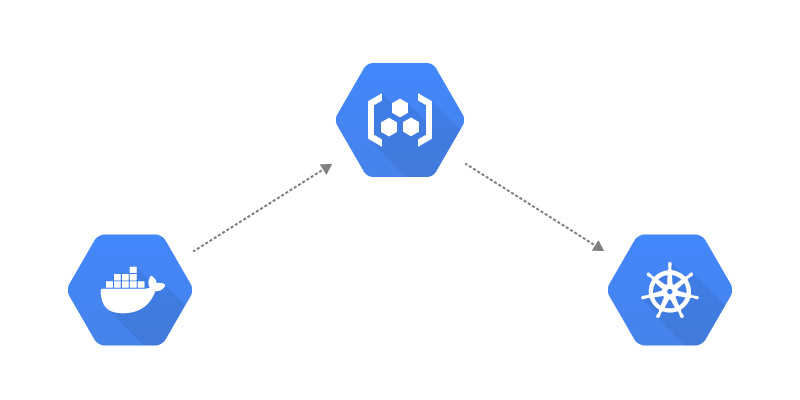

However, I discovered that pushing/pulling container images to/from Google’s Container Registry (GCR) isn’t as straightforward as with other registries. Google doesn’t provide a simple password token for authentication with the docker login command. This tutorial covers how to configure authentication with GCR from Kubernetes or Docker.

Prerequisites

- Google Cloud Platform Account

- Knowledge of Kubernetes & Docker

- Basic Linux Knowledge

- Command Line tools installed (kubectl, Google Cloud SDK)

Step 1 - Enable the Container Registry API

Run the following command to enable the Container Registry API on your project.
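A minimal sketch of that step, assuming the `gcloud` CLI is installed and authenticated. The helper name `enable_registry_api` is illustrative, not part of any tool.

```shell
# Hypothetical helper: enables the Container Registry API on one project.
# $1 = Google Cloud project ID
enable_registry_api() {
  gcloud services enable containerregistry.googleapis.com --project "$1"
}

# Usage:
# enable_registry_api "my-project-id"
```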

Step 2 - Create a new Service Account

Google uses Service Accounts to allow applications to make API calls to services running on GCP.

Set some variables for your Google Project ID (not name) and choose a name for the Service Account you’ll create. These variables will be used in commands throughout the rest of this tutorial:
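For instance, the two variables could be exported as below. `my-project-id` is a placeholder to replace with your own project ID; `gcr-push-pull` is the account name used in this tutorial.

```shell
# Placeholder project ID (assumption): replace with your own.
export PROJECT_ID="my-project-id"
# Name of the Service Account created in the next command.
export ACCOUNT_NAME="gcr-push-pull"
```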

Run the command below to create a new Service Account (for this tutorial I set $ACCOUNT_NAME to gcr-push-pull).
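One way to write the creation command is sketched here. The helper name `create_service_account` and the display name are assumptions chosen for illustration.

```shell
# Hypothetical helper: creates a Service Account in the given project.
# $1 = project ID, $2 = Service Account name
create_service_account() {
  gcloud iam service-accounts create "$2" \
    --project "$1" \
    --display-name "GCR push/pull"  # display name is an arbitrary label
}

# Usage:
# create_service_account "$PROJECT_ID" "$ACCOUNT_NAME"
```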

Creating the Service Account generates an associated email address, which is required for the next step. Run the following command to store the email in a variable (e.g. $ACCOUNT_EMAIL).
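A sketch of the lookup, assuming the account name is unique within the project. The helper name `service_account_email` and the exact filter expression are my own choices; other filters would work too.

```shell
# Hypothetical helper: prints the email of a Service Account.
# $1 = project ID, $2 = Service Account name
service_account_email() {
  gcloud iam service-accounts list \
    --project "$1" \
    --filter "email ~ ^$2@" \
    --format "value(email)"
}

# Usage:
# ACCOUNT_EMAIL=$(service_account_email "$PROJECT_ID" "$ACCOUNT_NAME")
```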

Step 3 - Grant the Service Account permissions

Google Container Registry actually leverages their Cloud Storage service by storing images in buckets. In order to push/pull images to/from the Registry, permissions need to be granted to the Service Account to access the bucket used by the Registry.

The following command grants admin permissions to the new Service Account. This is a temporary measure so that the Container Registry bucket can be created if it doesn’t already exist; see the note below for more details.
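The grant can be sketched as a project-level IAM binding. The helper name `grant_storage_admin` is hypothetical; the role is the one named in the note below.

```shell
# Hypothetical helper: binds roles/storage.admin to a Service Account.
# $1 = project ID, $2 = Service Account email
grant_storage_admin() {
  gcloud projects add-iam-policy-binding "$1" \
    --member "serviceAccount:$2" \
    --role "roles/storage.admin"
}

# Usage:
# grant_storage_admin "$PROJECT_ID" "$ACCOUNT_EMAIL"
```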

Note: The above role (roles/storage.admin) allows the Service Account to administer all buckets across the whole project. However, unless the Container Registry bucket responsible for storing images (named artifacts.PROJECT-ID.appspot.com or STORAGE-REGION.artifacts.PROJECT-ID.appspot.com) already exists, the storage.admin role is required to create this bucket by way of pushing an image to the Container Registry. See Container Registry - Granting Permissions for more details.

Step 4 - Create and Download the Service Account JSON key

A key associated with the Service Account is required by the Docker login command to authenticate with the GCR. Run the command below to create and download this key.
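A sketch of the key creation, assuming the key is written to `key.json` in the current directory (the file name used later in this tutorial). The helper name `create_service_account_key` is illustrative.

```shell
# Hypothetical helper: creates a JSON key and writes it to a local file.
# $1 = Service Account email, $2 = output path for the key
create_service_account_key() {
  gcloud iam service-accounts keys create "$2" --iam-account "$1"
}

# Usage:
# create_service_account_key "$ACCOUNT_EMAIL" key.json
```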

Warning: Keep your generated Service Account key(s) secure. For all intents and purposes they don’t expire (the expiry date is Dec 31st 9999)! See Best practices for managing credentials.

Step 5 - Log in from Docker and push an image to the Container Registry

With the key downloaded you can now log in to the Container Registry.

Where <storage-region-url> is the location the storage bucket will be created in when you push images:

- https://gcr.io for registries in the US Region (May change in the future; hosted separate from https://us.gcr.io)

- https://us.gcr.io for registries in the US Region

- https://eu.gcr.io for registries in the EU Region

- https://asia.gcr.io for registries in the ASIA Region

For example, to create the storage bucket in the EU region:
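The login can be sketched as follows, with `_json_key` as the username and the key file as the password. Feeding the key through `--password-stdin` instead of `-p` is my choice here, since it keeps the key out of the process list. The helper name `gcr_login` is hypothetical.

```shell
# Hypothetical helper: logs Docker in to a GCR host with a JSON key.
# $1 = registry URL (the <storage-region-url>), $2 = path to the JSON key
gcr_login() {
  docker login -u _json_key --password-stdin "$1" < "$2"
}

# Usage for the EU region:
# gcr_login https://eu.gcr.io key.json
```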

Using the Docker tag command, tag an existing local image according to the following syntax

e.g. Tag the busybox image
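Image references in GCR take the form HOSTNAME/PROJECT-ID/IMAGE:TAG. The sketch below builds that string from its parts; `gcr_image_ref` is a hypothetical helper, and the `latest` tag in the usage line is an assumption.

```shell
# Hypothetical helper: prints HOSTNAME/PROJECT-ID/IMAGE:TAG.
# $1 = registry host, $2 = project ID, $3 = image name, $4 = tag
gcr_image_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# Usage: tag the local busybox image for the EU registry.
# docker tag busybox "$(gcr_image_ref eu.gcr.io "$PROJECT_ID" busybox latest)"
```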

Now push this image to the GCR. This will automatically create the storage bucket in the specified region
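The push itself is a plain `docker push` of the tagged reference. A small sketch, with the hypothetical helper name `push_image`:

```shell
# Hypothetical helper: pushes a tagged image to its registry.
# $1 = full reference, e.g. eu.gcr.io/my-project-id/busybox:latest
push_image() {
  docker push "$1"
}

# Usage:
# push_image "eu.gcr.io/$PROJECT_ID/busybox:latest"
```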

The following command lists existing images in your Container Registry:
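A sketch of the listing, assuming the images were pushed under the project's own repository path. `list_gcr_images` is an illustrative name.

```shell
# Hypothetical helper: lists images under HOSTNAME/PROJECT-ID.
# $1 = registry host, $2 = project ID
list_gcr_images() {
  gcloud container images list --repository "$1/$2"
}

# Usage:
# list_gcr_images eu.gcr.io "$PROJECT_ID"
```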

You can see the recently pushed image is listed.

Step 6 - Update Service Account permissions

In Step 3 we added admin permissions to the Service Account. As mentioned earlier, this grants the Service Account permission to edit/delete/create buckets across the entire project! Now that the Container Registry bucket has been created, we can revoke this permission and replace it with a more fine-grained, bucket-specific role.
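Revoking the project-wide role mirrors the earlier grant. A sketch, with the hypothetical helper name `revoke_storage_admin`:

```shell
# Hypothetical helper: removes the roles/storage.admin binding.
# $1 = project ID, $2 = Service Account email
revoke_storage_admin() {
  gcloud projects remove-iam-policy-binding "$1" \
    --member "serviceAccount:$2" \
    --role "roles/storage.admin"
}

# Usage:
# revoke_storage_admin "$PROJECT_ID" "$ACCOUNT_EMAIL"
```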

If you try to push to the Registry now, the push will fail with an error informing you that you don’t have the necessary permissions.

You can grant the Service Account permission to access only the bucket containing your images with the following commands.

To grant the Service Account pull-only permission to the Registry, run
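Pull-only access can be sketched as a bucket-level grant of the Storage Object Viewer role via `gsutil iam ch`. The helper name `grant_pull_only` is hypothetical, and the bucket name in the usage line assumes images were pushed to the EU registry.

```shell
# Hypothetical helper: grants read access on the registry bucket.
# $1 = Service Account email, $2 = bucket name (without gs://)
grant_pull_only() {
  gsutil iam ch "serviceAccount:$1:objectViewer" "gs://$2"
}

# Usage:
# grant_pull_only "$ACCOUNT_EMAIL" "eu.artifacts.$PROJECT_ID.appspot.com"
```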

To grant the Service Account push & pull permissions to the Registry, run
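For push and pull, a sketch that grants both Storage Object Viewer (read) and Storage Legacy Bucket Writer (write) on the bucket. Pairing the two roles is my assumption to cover both directions; check Google's access-control page for the roles it currently recommends. `grant_push_pull` is an illustrative name.

```shell
# Hypothetical helper: grants read and write access on the registry bucket.
# $1 = Service Account email, $2 = bucket name (without gs://)
grant_push_pull() {
  gsutil iam ch "serviceAccount:$1:objectViewer,legacyBucketWriter" "gs://$2"
}

# Usage:
# grant_push_pull "$ACCOUNT_EMAIL" "eu.artifacts.$PROJECT_ID.appspot.com"
```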

Check your chosen role has been successfully added
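One way to check is to print the bucket's IAM policy and look for the Service Account's email among the members. A sketch, with the hypothetical helper name `show_bucket_iam`:

```shell
# Hypothetical helper: prints the IAM policy of a bucket as JSON.
# $1 = bucket name (without gs://)
show_bucket_iam() {
  gsutil iam get "gs://$1"
}

# Usage:
# show_bucket_iam "eu.artifacts.$PROJECT_ID.appspot.com"
```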

Step 7 - Configure the Container Registry in Kubernetes

Now that the GCR has been set up we can configure Kubernetes to access it. Kubernetes deployments can pull images from private registries using the imagePullSecrets field. This field allows you to set credentials allowing Pods to pull images from a private registry.

The first step is to create the secret (credentials) that the imagePullSecrets field will reference in a deployment. This can be achieved in a number of ways. The easiest method, in my opinion, is to create a secret of type docker-registry with kubectl.
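A sketch of the secret creation, assuming the namespace already exists and `key.json` is in the current directory. The helper name `create_gcr_pull_secret` and its argument order are my own.

```shell
# Hypothetical helper: creates a docker-registry secret from a JSON key.
# $1 = namespace, $2 = secret name, $3 = registry host, $4 = path to JSON key
create_gcr_pull_secret() {
  kubectl create secret docker-registry "$2" \
    --namespace="$1" \
    --docker-server="$3" \
    --docker-username=_json_key \
    --docker-email=not@val.id \
    --docker-password="$(cat "$4")"
}

# Usage:
# create_gcr_pull_secret example gcr-io gcr.io key.json
```

The registry host passed as the third argument should match the host in your image references, so use eu.gcr.io if that is where the images were pushed.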

Where:

- example is the namespace in which the secret is created

- gcr-io is the chosen name of the secret

- gcr.io is the FQDN of the private registry (in this case the GCR)

- _json_key is the username for authenticating with GCR (any value other than _json_key will result in an error)

- not@val.id can be any valid email address

- "$(cat key.json)" will import your Service Account key to be used by the created secret from the set path

Now that the secret has been successfully created it can be used to pull images from the GCR in a couple of ways.

First, the imagePullSecrets property can be explicitly specified in a deployment.

Example (based on the busybox image pushed to the GCR earlier):
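A sketch of such a deployment, written as a shell function that prints the manifest so it can be piped to `kubectl apply`. The function name `busybox_deployment_manifest`, the labels, and the `sleep` command are assumptions for illustration.

```shell
# Hypothetical helper: prints a Deployment manifest that pulls from GCR.
# $1 = image reference in the registry, $2 = name of the pull secret
busybox_deployment_manifest() {
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox
spec:
  replicas: 1
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
        - name: busybox
          image: $1
          command: ["sleep", "3600"]
      imagePullSecrets:
        - name: $2
EOF
}

# Usage:
# busybox_deployment_manifest "eu.gcr.io/$PROJECT_ID/busybox:latest" gcr-io \
#   | kubectl apply --namespace example -f -
```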

The image field references the image location in the GCR, and the name field under imagePullSecrets references the secret we created for authenticating with Google’s Container Registry before pulling the image.

Alternatively, imagePullSecrets can be configured on the default service account. This allows every pod in the defined namespace to access the private GCR.

The kubectl patch command patches the default service account with the imagePullSecrets configuration.
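A sketch of the patch, assuming the secret already exists in the same namespace. The helper name `patch_default_service_account` is hypothetical.

```shell
# Hypothetical helper: attaches a pull secret to the default service account.
# $1 = namespace, $2 = secret name
patch_default_service_account() {
  kubectl patch serviceaccount default \
    --namespace "$1" \
    -p "{\"imagePullSecrets\": [{\"name\": \"$2\"}]}"
}

# Usage:
# patch_default_service_account example gcr-io
```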

Now any deployments to the same namespace will be able to pull images from the GCR without having to specify the imagePullSecrets field in the deployment itself.